Snowflake Time Travel: The Ultimate Guide to Understand, Use & Get Started 101

By: Harsh Varshney | Published: January 13, 2022


To empower your business decisions with data, you need real-time, high-quality data from all of your data sources in a central repository. Traditional On-Premise Data Warehouse solutions have limited Scalability and Performance, and they require constant maintenance. Snowflake is a more Cost-Effective and Instantly Scalable solution with industry-leading Query Performance. It's a one-stop shop for Cloud Data Warehousing and Analytics, with full SQL support for Data Analysis and Transformations. One of Snowflake's standout features is Snowflake Time Travel.


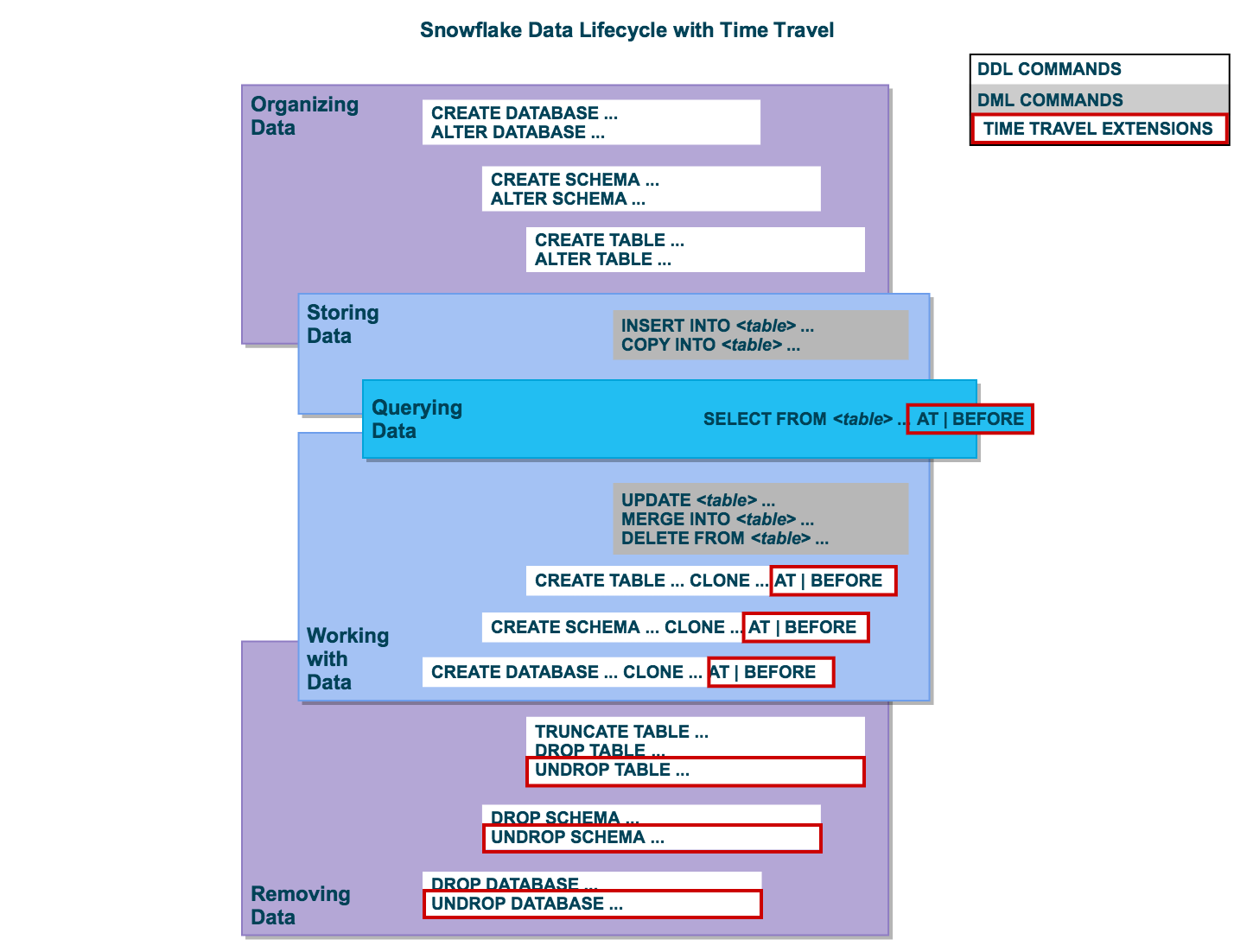

Snowflake Time Travel allows you to access Historical Data (that is, data that has been updated or removed) at any point in time. It is an effective tool for doing the following tasks:

- Restoring Data-Related Objects (Tables, Schemas, and Databases) that may have been removed by accident or on purpose.

- Duplicating and Backing up Data from previous periods of time.

- Analyzing Data Manipulation and Consumption over a set period of time.

In this article, you will learn how Snowflake Time Travel works and how to use it, with simple SQL code for each step.

What is Snowflake?

Snowflake is a Cloud Data Warehouse solution built on the customer's preferred Cloud Provider's infrastructure (AWS, Azure, or GCP). Snowflake SQL adheres to the ANSI Standard and includes typical Analytics and Windowing Capabilities. Its syntax differs from other SQL dialects in places, but there are also many parallels.

Snowflake's integrated development environment (IDE) is entirely Web-based. Visit XXXXXXXX.us-east-1.snowflakecomputing.com. After logging in, you'll be sent to the primary Online GUI, which works as an IDE where you can begin interacting with your Data Assets. Each query tab in the Snowflake interface is called a “Worksheet”. Like the tab history, these Worksheets are saved automatically and can be viewed at any time.

Key Features of Snowflake

- Query Optimization: Snowflake optimizes queries on its own using Clustering and Partitioning (micro-partitions), so manual Query Optimization is rarely something to be concerned about.

- Secure Data Sharing: Database Tables, Views, and UDFs can be shared securely from one Snowflake account to another.

- Support for File Formats: JSON, Avro, ORC, Parquet, and XML are all Semi-Structured data formats that Snowflake can import. It has a VARIANT column type that lets you store Semi-Structured data.

- Caching: Snowflake has a caching strategy that quickly returns the results of a query from the cache when the same query is repeated. Query results are persisted (by default for 24 hours), so Snowflake avoids regenerating the report when the underlying data has not changed.

- SQL and Standard Support: Snowflake offers both standard and extended SQL support, as well as Advanced SQL features such as MERGE, LATERAL, Statistical Functions, and many others.

- Fault Tolerance: Snowflake provides fault-tolerant capabilities to recover Snowflake objects (tables, views, databases, schemas, and so on) in the event of a failure.

For further information, check out the official Snowflake website.

What is the Snowflake Time Travel Feature?

Snowflake Time Travel is a feature that allows you to access data from any point in the past within a defined retention period. For example, if you have an Employee table and you inadvertently delete it, you can use Time Travel to go back 5 minutes and retrieve the data. It is an effective tool for doing the following tasks:

- Query Data that has been changed or deleted in the past.

- Make clones of complete Tables, Schemas, and Databases at or before certain dates.

- Restore Tables, Schemas, and Databases that have been deleted.


How to Enable & Disable Snowflake Time Travel Feature?

1) Enable Snowflake Time Travel

To enable Snowflake Time Travel, no action is necessary. It is turned on by default, with a one-day retention period. However, if you want to configure longer Data Retention Periods of up to 90 days for Databases, Schemas, and Tables, you'll need to upgrade to Snowflake Enterprise Edition. Keep in mind that longer Data Retention requires more storage, which will be reflected in your monthly Storage Fees. See Storage Costs for Time Travel and Fail-safe for further information on storage fees.

For Snowflake Time Travel, the example below builds a table with 90 days of retention.

To shorten the retention term for a certain table, the below query can be used.
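The two statements described above can be sketched as follows. This is a minimal, hypothetical Python sketch that only builds the SQL text and never connects to Snowflake. The table name `mytable` and its columns are placeholders. The `DATA_RETENTION_TIME_IN_DAYS` parameter and the 90-day ceiling come from this article.

```python
MAX_RETENTION_DAYS = 90  # ceiling for permanent objects on Enterprise Edition and higher


def create_table_with_retention(table: str, columns: str, days: int) -> str:
    """Build a CREATE TABLE statement that sets a table-level Time Travel retention."""
    if not 0 <= days <= MAX_RETENTION_DAYS:
        raise ValueError(f"retention must be between 0 and {MAX_RETENTION_DAYS} days")
    return f"CREATE TABLE {table} ({columns}) DATA_RETENTION_TIME_IN_DAYS = {days};"


def shorten_table_retention(table: str, current_days: int, new_days: int) -> str:
    """Build an ALTER TABLE statement that lowers an existing retention period."""
    if not 0 <= new_days < current_days:
        raise ValueError("new retention must be non-negative and shorter than the current one")
    return f"ALTER TABLE {table} SET DATA_RETENTION_TIME_IN_DAYS = {new_days};"


if __name__ == "__main__":
    # Hypothetical table: keep 90 days of history, then cut it back to 30.
    print(create_table_with_retention("mytable", "col1 NUMBER, col2 DATE", 90))
    print(shorten_table_retention("mytable", 90, 30))
```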

2) Disable Snowflake Time Travel

Snowflake Time Travel cannot be turned off for an account, but it can be turned off for individual Databases, Schemas, and Tables by setting the object’s DATA_RETENTION_TIME_IN_DAYS to 0.

Users with the ACCOUNTADMIN role can also set DATA_RETENTION_TIME_IN_DAYS to 0 at the account level, which means that by default, all Databases (and, by extension, all Schemas and Tables) created in the account have no retention period. However, this default can be overridden at any time for any Database, Schema, or Table.
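Both ways of switching Time Travel off can be sketched as SQL-building helpers. This assumes nothing beyond what the text states: setting `DATA_RETENTION_TIME_IN_DAYS` to 0 on an object or on the account. The object names in the usage line are hypothetical.

```python
OBJECT_KINDS = ("DATABASE", "SCHEMA", "TABLE")


def disable_time_travel(kind: str, name: str) -> str:
    """Turn Time Travel off for one database, schema, or table by zeroing its retention."""
    kind = kind.upper()
    if kind not in OBJECT_KINDS:
        raise ValueError(f"retention is set per {', '.join(OBJECT_KINDS)}")
    return f"ALTER {kind} {name} SET DATA_RETENTION_TIME_IN_DAYS = 0;"


def disable_account_default() -> str:
    """Account-wide default of 0 days; must be run with the ACCOUNTADMIN role.

    Individual databases, schemas, and tables can still override this default.
    """
    return "ALTER ACCOUNT SET DATA_RETENTION_TIME_IN_DAYS = 0;"


if __name__ == "__main__":
    print(disable_time_travel("table", "scratch_table"))  # hypothetical table
    print(disable_account_default())
```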

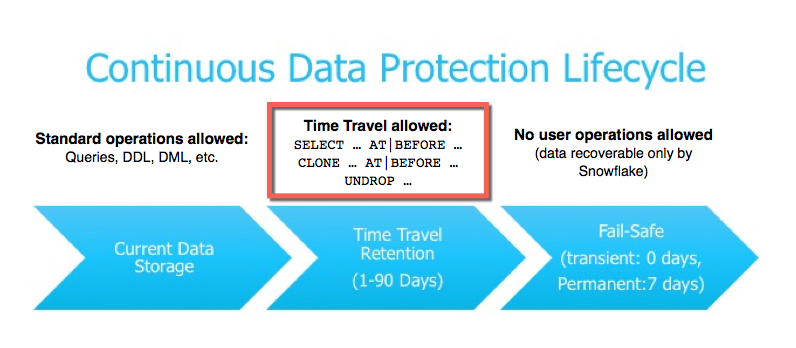

3) What are Data Retention Periods?

Data Retention Time is an important part of Snowflake Time Travel. When data in a table is modified, including deleting data or dropping an object that contains data, Snowflake preserves the state of the data before the update. The Data Retention Period sets the number of days that this historical data will be stored, allowing Time Travel operations (SELECT, CREATE … CLONE, UNDROP) to be performed on it.

All Snowflake Accounts have a standard retention duration of one day (24 hours), which is automatically enabled:

- In Snowflake Standard Edition, the Retention Period can be set to 0 (or unset back to the default of 1 day) at the account and object level (i.e. Databases, Schemas, and Tables).

- For transient Databases, Schemas, and Tables, the Retention Period can be set to 0 (or unset back to the default of 1 day). The same is true of Temporary Tables.

- In Snowflake Enterprise Edition (and higher), the Retention Time for permanent Databases, Schemas, and Tables can be configured to any number between 0 and 90 days.
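The rules above can be condensed into a small validator. This is a sketch only. The edition keys are assumptions: the article names Standard and Enterprise, and Business Critical is treated like Enterprise here.

```python
# Upper bound for *permanent* objects, by edition (Business Critical assumed = Enterprise).
PERMANENT_MAX_DAYS = {"standard": 1, "enterprise": 90, "business critical": 90}


def max_retention_days(edition: str, object_type: str) -> int:
    """Largest DATA_RETENTION_TIME_IN_DAYS allowed for this edition and object type."""
    edition, object_type = edition.lower(), object_type.lower()
    if edition not in PERMANENT_MAX_DAYS:
        raise ValueError(f"unknown edition: {edition}")
    if object_type in ("transient", "temporary"):
        return 1  # only 0 or the 1-day default, regardless of edition
    if object_type == "permanent":
        return PERMANENT_MAX_DAYS[edition]
    raise ValueError(f"unknown object type: {object_type}")


def is_valid_retention(edition: str, object_type: str, days: int) -> bool:
    return 0 <= days <= max_retention_days(edition, object_type)


if __name__ == "__main__":
    print(is_valid_retention("Enterprise", "permanent", 90))  # True
    print(is_valid_retention("Standard", "permanent", 2))     # False
```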

4) What are Snowflake Time Travel SQL Extensions?

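The following SQL extensions have been added to facilitate Snowflake Time Travel:

- The AT | BEFORE clause, which can be used in SELECT statements and CREATE … CLONE commands (immediately after the object name). It takes one of these parameters: TIMESTAMP (a specific date and time), OFFSET (time difference in seconds from the present time), or STATEMENT (identifier for a statement, e.g. a query ID).

- The UNDROP command for Tables, Schemas, and Databases.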

How Long Does Snowflake Time Travel Keep Data?

How to Specify a Custom Data Retention Period for Snowflake Time Travel

In Standard Edition, the maximum Retention Time is 1 day (i.e. one 24-hour period), which is also the default. In Snowflake Enterprise Edition (and higher), the default for your account can be set to any value up to 90 days:

- The account default can be overridden using the DATA_RETENTION_TIME_IN_DAYS parameter in the command when creating a Table, Schema, or Database.

- If a Database or Schema has a Retention Period, that duration is inherited by default by all objects created in the Database/Schema.

The Data Retention Time can be set as shown in the example below.
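The inheritance rule in the list above can be modelled as a lookup from the most specific object outward. This is a simplified, hypothetical model. `None` stands for "not explicitly set", while 0 is a real setting that disables Time Travel.

```python
from typing import Optional


def effective_retention(account_default: int,
                        database: Optional[int] = None,
                        schema: Optional[int] = None,
                        table: Optional[int] = None) -> int:
    """Resolve the retention that applies to a table.

    An explicitly set value on the most specific object wins; anything left
    unset (None) is inherited from its parent, ending at the account default.
    """
    for explicit in (table, schema, database):
        if explicit is not None:  # 0 counts as explicit (Time Travel disabled)
            return explicit
    return account_default


if __name__ == "__main__":
    print(effective_retention(1, database=30))             # 30, inherited from the database
    print(effective_retention(1, database=30, schema=10))  # 10, the schema overrides
```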


How to Modify Data Retention Period for Snowflake Objects?

When you alter a Table's Data Retention Period, the new Retention Period affects all active data as well as any data currently in Time Travel. The effect depends on whether you lengthen or shorten the period:

1) Increasing Retention

This causes the data in Snowflake Time Travel to be saved for a longer amount of time.

For example, if you increase the retention time on a Table from 10 to 20 days, data that would have left Time Travel after 10 days is now kept for an additional 10 days before being moved to Fail-Safe. This does not apply to data that is more than 10 days old and has already been moved to Fail-Safe.

2) Decreasing Retention

- Reduces the amount of time data is stored in Time Travel.

- The new Shorter Retention Period applies to active data updated after the Retention Period was trimmed.

- If historical data is still inside the new Shorter Period, it stays in Time Travel.

- If historical data falls outside the new Timeframe, it is moved to Fail-Safe.

For example, if you have a table with a 10-day Retention Period and reduce it to one day, data from days 2 through 10 will be moved to Fail-Safe, leaving just data from day 1 accessible through Time Travel.

However, because the data is moved from Snowflake Time Travel to Fail-Safe by a background operation, the change is not immediately visible. Snowflake guarantees that the data will be migrated, but does not specify when the process will be completed. Until the background operation finishes, the data is still accessible using Time Travel.
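The effect of increasing or decreasing retention can be sketched as a single predicate. This is a simplified model, assuming "day 1" means data that became historical less than one day ago. It shows the state once the background move has finished and ignores the delay described above.

```python
def in_time_travel_after_change(age_days: float, old_days: int, new_days: int) -> bool:
    """Is a historical version still reachable by Time Travel after a retention change?

    age_days: how long ago the data became historical (was updated or deleted).
    """
    if age_days >= old_days:
        # Already aged out under the old setting; a longer retention
        # does not bring data back from Fail-Safe.
        return False
    # Data still in Time Travel stays there only if it fits the new window.
    return age_days < new_days


if __name__ == "__main__":
    # The 10-day -> 1-day example: only data from "day 1" (age < 1 day) remains.
    print([age for age in range(10) if in_time_travel_after_change(age, 10, 1)])  # [0]
```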

Use the appropriate ALTER <object> Command to adjust an object’s Retention duration. For example, the below command is used to adjust the Retention duration for a table:
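A generic form of that ALTER command, covering databases, schemas, and tables, can be sketched as follows. Passing `None` emits `UNSET`, which removes the explicit value so the object inherits its parent's retention again. Object names in the usage lines are hypothetical.

```python
from typing import Optional

OBJECT_KINDS = ("DATABASE", "SCHEMA", "TABLE")


def alter_retention(kind: str, name: str, days: Optional[int]) -> str:
    """Build ALTER <object> ... SET/UNSET DATA_RETENTION_TIME_IN_DAYS."""
    kind = kind.upper()
    if kind not in OBJECT_KINDS:
        raise ValueError(f"unsupported object kind: {kind}")
    if days is None:
        # Fall back to the inherited (parent or account) retention.
        return f"ALTER {kind} {name} UNSET DATA_RETENTION_TIME_IN_DAYS;"
    if not 0 <= days <= 90:
        raise ValueError("retention must be between 0 and 90 days")
    return f"ALTER {kind} {name} SET DATA_RETENTION_TIME_IN_DAYS = {days};"


if __name__ == "__main__":
    print(alter_retention("table", "mytable", 30))
    print(alter_retention("schema", "myschema", None))
```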

How to Query Snowflake Time Travel Data?

When you perform DML operations on a table, Snowflake saves prior versions of the Table data for a set amount of time. Using the AT | BEFORE Clause, you can Query previous versions of the data.

This Clause allows you to query data at or immediately before a certain point in the Table's history within the Retention Period. The specified point can be time-based (e.g., a Timestamp or a Time Offset from the present) or the ID of a statement (e.g. a SELECT or INSERT).
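The difference between "at" and "immediately before" can be illustrated with a toy in-memory history. This is a conceptual model, not how Snowflake stores data. Each hypothetical `Version` records the statement that produced it. AT includes changes committed at the given time, while BEFORE excludes the named statement's changes. The retention window is ignored here.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Version:
    committed_at: int        # commit time of the DML statement (epoch seconds)
    statement_id: str        # query ID of that statement
    rows: Tuple[str, ...]    # table contents after the statement


def state_at(history: List[Version], ts: int) -> Tuple[str, ...]:
    """AT(TIMESTAMP => ts): includes changes committed exactly at ts."""
    times = [v.committed_at for v in history]  # history is sorted by commit time
    i = bisect_right(times, ts)
    if i == 0:
        raise LookupError("no version exists at or before that time")
    return history[i - 1].rows


def state_before(history: List[Version], statement_id: str) -> Tuple[str, ...]:
    """BEFORE(STATEMENT => id): the contents just before that statement's changes."""
    for i, version in enumerate(history):
        if version.statement_id == statement_id:
            if i == 0:
                raise LookupError("the statement created the first version")
            return history[i - 1].rows
    raise LookupError(f"unknown statement id: {statement_id}")


if __name__ == "__main__":
    history = [
        Version(100, "q1", ("alice",)),
        Version(200, "q2", ("alice", "bob")),
        Version(300, "q3", ("bob",)),  # q3 deleted alice
    ]
    print(state_at(history, 250))       # ('alice', 'bob')
    print(state_before(history, "q3"))  # ('alice', 'bob')
```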

- The query below selects Historical Data from a Table as of the Date and Time indicated by the Timestamp:

- The following Query pulls Data from a Table as it existed 5 minutes ago:

- The following Query collects Historical Data from a Table up to, but not including, the changes made by the specified statement:
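The three queries in this list can be sketched as Python functions that build the SQL. The table name, timestamp, and query ID in the usage lines are hypothetical placeholders. The `AT(TIMESTAMP => ...)`, `AT(OFFSET => ...)`, and `BEFORE(STATEMENT => ...)` forms are the Snowflake clauses this section describes.

```python
from datetime import datetime, timedelta, timezone


def _quote(value: str) -> str:
    """Single-quote a SQL string literal, doubling any embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def select_at_timestamp(table: str, ts: datetime) -> str:
    if ts.tzinfo is None:
        raise ValueError("use a timezone-aware datetime so the instant is unambiguous")
    literal = ts.strftime("%Y-%m-%d %H:%M:%S %z")
    return f"SELECT * FROM {table} AT(TIMESTAMP => {_quote(literal)}::TIMESTAMP_TZ);"


def select_at_offset(table: str, seconds_ago: int) -> str:
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be positive")
    # OFFSET is negative: the number of seconds before the current time.
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds_ago});"


def select_before_statement(table: str, query_id: str) -> str:
    return f"SELECT * FROM {table} BEFORE(STATEMENT => {_quote(query_id)});"


if __name__ == "__main__":
    pdt = timezone(timedelta(hours=-7))
    print(select_at_timestamp("my_table", datetime(2015, 5, 1, 16, 20, tzinfo=pdt)))
    print(select_at_offset("my_table", 60 * 5))  # five minutes ago
    print(select_before_statement("my_table", "8e5d0ca9-005e-44e6-b858-a8f5b37c5726"))
```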

How to Clone Historical Data in Snowflake?

In addition to queries, the AT | BEFORE Clause can be combined with the CLONE keyword in the CREATE command for a Table, Schema, or Database to create a logical duplicate of the object at a specific point in its history.

Consider the following examples:

- The CREATE TABLE command below generates a Clone of a Table as of the Date and Time indicated by the Timestamp:

- The following CREATE SCHEMA command produces a Clone of a Schema and all of its Objects as they were an hour ago:

- The CREATE DATABASE command produces a Clone of a Database and all of its Objects as they were before the specified statement was completed:
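The three clone commands above follow one pattern: `CREATE <kind> <new> CLONE <source>` followed by an AT or BEFORE clause. Here is a hypothetical builder for that pattern. All object names and the query ID are placeholders.

```python
from typing import Optional

CLONEABLE = ("DATABASE", "SCHEMA", "TABLE")


def point_in_time(*, timestamp: Optional[str] = None, offset_seconds: Optional[int] = None,
                  statement: Optional[str] = None, before: bool = False) -> str:
    """Build an AT(...) or BEFORE(...) clause from exactly one reference point."""
    given = [p for p in (timestamp, offset_seconds, statement) if p is not None]
    if len(given) != 1:
        raise ValueError("specify exactly one of timestamp, offset_seconds, statement")
    if timestamp is not None:
        param = f"TIMESTAMP => '{timestamp}'::TIMESTAMP_TZ"
    elif offset_seconds is not None:
        param = f"OFFSET => {offset_seconds}"  # negative = seconds in the past
    else:
        param = f"STATEMENT => '{statement}'"
    return f"{'BEFORE' if before else 'AT'}({param})"


def clone_as_of(kind: str, new_name: str, source: str, clause: str) -> str:
    kind = kind.upper()
    if kind not in CLONEABLE:
        raise ValueError(f"cannot clone a {kind} with Time Travel here")
    return f"CREATE {kind} {new_name} CLONE {source} {clause};"


if __name__ == "__main__":
    print(clone_as_of("schema", "restored_schema", "my_schema",
                      point_in_time(offset_seconds=-3600)))  # as of one hour ago
```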

Using UNDROP Command with Snowflake Time Travel: How to Restore Objects?

The following commands can be used to restore a dropped object that has not been purged from the system (i.e. the object is still visible in the SHOW <object_type> HISTORY output):

- UNDROP DATABASE

- UNDROP TABLE

- UNDROP SCHEMA

UNDROP restores the object to its most recent state before the DROP command was issued.

A dropped Database can be restored using the UNDROP command.

Similarly, you can UNDROP Tables and Schemas.
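A small helper can produce the restore statements. One practical detail: UNDROP fails if an object with the same name already exists, so the sketch can optionally rename that object first. The names in the usage line are hypothetical.

```python
from typing import List, Optional

RESTORABLE = ("DATABASE", "SCHEMA", "TABLE")


def undrop_statements(kind: str, name: str,
                      rename_existing_to: Optional[str] = None) -> List[str]:
    """UNDROP a dropped object, optionally moving a same-named object out of the way."""
    kind = kind.upper()
    if kind not in RESTORABLE:
        raise ValueError(f"UNDROP is covered here only for {RESTORABLE}")
    statements = []
    if rename_existing_to:
        # UNDROP fails if an object with the same name already exists.
        statements.append(f"ALTER {kind} {name} RENAME TO {rename_existing_to};")
    statements.append(f"UNDROP {kind} {name};")
    return statements


if __name__ == "__main__":
    for sql in undrop_statements("database", "mydb", rename_existing_to="mydb_new"):
        print(sql)
```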

Snowflake Fail-Safe vs Snowflake Time Travel: What is the Difference?

In the event of a System Failure or other Catastrophic Event, such as a Hardware Failure or a Security Incident, Fail-Safe ensures that Historical Data is preserved. Snowflake Time Travel, by contrast, allows you to access Historical Data (that is, data that has been updated or removed) yourself at any point within the Retention Period.

Fail-Safe mode allows Snowflake to recover Historical Data for a (non-configurable) 7-day period. This period begins as soon as the Snowflake Time Travel Retention Period expires. Unlike Time Travel data, data in Fail-Safe cannot be queried directly; it can only be recovered by Snowflake.
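The two periods can be laid out on a timeline: Time Travel first, then the fixed 7-day Fail-Safe window. This sketch assumes a permanent table, because transient and temporary tables have no Fail-Safe period. The dates in the usage line are hypothetical.

```python
from datetime import datetime, timedelta

FAIL_SAFE_DAYS = 7  # fixed by Snowflake; not configurable


def recovery_option(changed_at: datetime, retention_days: int, now: datetime) -> str:
    """Where historical data for a permanent table sits at `now`."""
    time_travel_ends = changed_at + timedelta(days=retention_days)
    fail_safe_ends = time_travel_ends + timedelta(days=FAIL_SAFE_DAYS)
    if now < time_travel_ends:
        return "time_travel"  # self-service: SELECT ... AT/BEFORE, CLONE, UNDROP
    if now < fail_safe_ends:
        return "fail_safe"    # recoverable only by Snowflake
    return "purged"


if __name__ == "__main__":
    deleted = datetime(2024, 1, 1)
    print(recovery_option(deleted, 1, datetime(2024, 1, 5)))  # fail_safe
```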

This article has introduced you to the various Snowflake Time Travel features to help you improve your overall decision-making and experience when trying to make the most out of your data. If you want to export data from a source of your choice into your desired Database/destination like Snowflake, then Hevo is the right choice for you!

However, as a Developer, extracting complex data from a diverse set of data sources like Databases, CRMs, Project management Tools, Streaming Services, and Marketing Platforms to your Database can seem quite challenging. If you are from a non-technical background or are new to data warehousing and analytics, Hevo can help!

Hevo will automate your data transfer process, allowing you to focus on other aspects of your business like Analytics, Customer Management, etc. Hevo provides a wide range of sources (150+ Data Sources, including 40+ Free Sources) that connect with 15+ Destinations. It will provide you with a seamless experience and make your work life much easier.


Harsh has experience in research analysis and a passion for data, software architecture, and writing technical content. He has written more than 100 articles on data integration and infrastructure.


Panic hits when you mistakenly delete data. Problems can come from a mistake that disrupts a process or, worse, from deleting the whole database. Thoughts of how recent the last backup was and how much time will be lost might have you wishing for a rewind button. With Snowflake's Time Travel, straightening out your database doesn't have to be a disaster. A few SQL commands allow you to go back in time and reclaim the past, saving you from the time and stress of a more extensive restore.

We'll get started in the Snowflake web console, configure data retention, and use Time Travel to retrieve historic data. Before querying for your previous database states, let's review the prerequisites for this guide.

Prerequisites

- Quick Video Introduction to Snowflake

- Snowflake Data Loading Basics Video

What You'll Learn

- Snowflake account and user permissions

- Make database objects

- Set data retention timelines for Time Travel

- Query Time Travel data

- Clone past database states

- Remove database objects

- Next options for data protection

What You'll Need

- A Snowflake Account

What You'll Build

- Create database objects with Time Travel data retention

First things first, let's get your Snowflake account and user permissions primed to use Time Travel features.

Create a Snowflake Account

Snowflake lets you try out its services for free with a trial account. A Standard account allows for one day of Time Travel data retention, and an Enterprise account allows for up to 90 days of data retention. An Enterprise account is necessary to practice some commands in this tutorial.

Login and Setup Lab

Log into your Snowflake account. You can access the SQL commands we will execute throughout this lab directly in your Snowflake account by setting up your environment below:

Setup Lab Environment

This will create worksheets containing the lab SQL that can be executed as we step through this lab.

Once the lab has been set up, you can continue it by revisiting the lab details page and clicking Continue Lab, or by navigating to Worksheets and selecting the Getting Started with Time Travel folder.

Increase Your Account Permission

Snowflake's web interface has a lot to offer, but for now, switch the account role from the default SYSADMIN to ACCOUNTADMIN. You'll need this increase in permissions later.

Now that you have the account and user permissions needed, let's create the required database objects to test drive Time Travel.

Within the Snowflake web console, navigate to Worksheets and use the 'Getting Started with Time Travel' Worksheets we created earlier.

Create Database

Use the above command to make a database called 'timeTravel_db'. The Results output will show the status message Database TIMETRAVEL_DB successfully created.

Create Table

This command creates a table named 'timeTravel_table' in the timeTravel_db database. The Results output should show the status message Table TIMETRAVEL_TABLE successfully created.

With the Snowflake account and database ready, let's get down to business by configuring Time Travel.

Be ready for anything by setting up data retention beforehand. The default setting is one day of data retention. However, if your one day mark passes and you need the previous database state back, you can't retroactively extend the data retention period. This section teaches you how to be prepared by preconfiguring Time Travel retention.

Alter Table

The command above changes the table's data retention period to 55 days. If you opted for a Standard account, your data retention period is limited to the default of one day. An Enterprise account allows for up to 90 days of preservation in Time Travel.
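The three setup steps so far (database, table, retention) can be sketched as one list of statements. The lab's exact SQL is not reproduced here. The `ID INT` column and the `PUBLIC` schema are assumptions, and `CREATE OR REPLACE` overwrites any existing object with the same name. The edition check mirrors the one-day limit on Standard accounts.

```python
from typing import List

DATABASE = "timeTravel_db"
TABLE = f"{DATABASE}.public.timeTravel_table"


def lab_setup(edition: str, retention_days: int = 55) -> List[str]:
    """Statements for the lab's setup steps: database, table, then retention."""
    limit = 1 if edition.lower() == "standard" else 90
    if not 0 <= retention_days <= limit:
        raise ValueError(f"{edition} Edition allows at most {limit} day(s) of retention")
    return [
        f"CREATE OR REPLACE DATABASE {DATABASE};",
        # The single ID column is a hypothetical placeholder schema.
        f"CREATE OR REPLACE TABLE {TABLE} (ID INT);",
        f"ALTER TABLE {TABLE} SET DATA_RETENTION_TIME_IN_DAYS = {retention_days};",
    ]


if __name__ == "__main__":
    for sql in lab_setup("enterprise"):
        print(sql)
```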

Now you know how easy it is to alter your data retention, let's bend the rules of time by querying an old database state with Time Travel.

With your data retention period specified, let's turn back the clock with the AT and BEFORE clauses.

Use timestamp to summon the database state at a specific date and time.

Use offset to call up the database state at a given time difference from the current time. Calculate the offset in seconds with math expressions. The example above uses -60*5, which translates to five minutes ago.

If you're looking to restore a database state just before a transaction occurred, grab the transaction's statement id. Use the command above with your statement id to get the database state right before the transaction statement was executed.

By practicing these queries, you'll be confident in how to find a previous database state. After locating the desired database state, you'll need to get a copy by cloning in the next step.

With the past at your fingertips, make a copy of the old database state you need with the clone keyword.

Clone Table

The command above creates a new table named restoredTimeTravel_table that is an exact copy of the table timeTravel_table from five minutes prior.

Cloning will allow you to maintain the current database while getting a copy of a past database state. After practicing the steps in this guide, remove the practice database objects in the next section.

You've created a Snowflake account, made database objects, configured data retention, queried old table states, and generated a copy of an old table state. Pat yourself on the back! Complete the steps of this tutorial by deleting the objects you created.

By dropping the table before the database, the retention period previously specified on the table is honored. If a parent object (e.g., a database) is dropped without first dropping the child object (e.g., a table), the child's own data retention period is not honored; the child is retained for the parent's retention period instead.

Drop Database
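The cleanup order described above can be sketched as follows: child tables first, then the database. A second helper shows which retention applies in each case. `restoredTimeTravel_table` is the clone from the earlier step, and placing both tables in the `public` schema is an assumption.

```python
from typing import List


def cleanup(database: str, tables: List[str], schema: str = "public") -> List[str]:
    """Drop child tables first so each keeps its own retention, then the database."""
    statements = [f"DROP TABLE {database}.{schema}.{t};" for t in tables]
    statements.append(f"DROP DATABASE {database};")
    return statements


def retention_applied(table_days: int, database_days: int, dropped_with_database: bool) -> int:
    """Days a table stays in Time Travel after it is dropped.

    If the table disappears only because its database was dropped, the
    database's retention applies instead of the table's own setting.
    """
    return database_days if dropped_with_database else table_days


if __name__ == "__main__":
    for sql in cleanup("timeTravel_db", ["timeTravel_table", "restoredTimeTravel_table"]):
        print(sql)
    print(retention_applied(55, 1, dropped_with_database=True))  # 1
```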

With the database now removed, you've completed learning how to call, copy, and erase the past.

Getting Started with Snowflake: Using Time Travel & the SnowSQL CLI

- 13 videos | 1h 25m 59s

- Includes Assessment

- Earns a Badge

WHAT YOU WILL LEARN

In this course:

1. Course Overview (52s)

2. The Phases of Snowflake Data Storage (2m 54s)

3. Configuring a Table for Time Travel (7m 1s)

4. Using Query IDs and Time Offsets for Time Travel (7m 32s)

5. Cloning Historical Copies of Data Using Timestamps (10m 3s)

6. Restoring Dropped Tables (5m 36s)

7. Installing SnowSQL on Mac (10m 32s)

8. Installing SnowSQL on Windows (7m 29s)

9. Executing Queries from SnowSQL (8m 43s)

10. Running Queries in Source Files (5m 52s)

11. Defining and Using Variables (9m 39s)

12. Writing Out Query Results to Files (6m 50s)

13. Course Summary (2m 57s)

EARN A DIGITAL BADGE WHEN YOU COMPLETE THIS COURSE

Skillsoft gives you the opportunity to earn a digital badge upon successful completion of some of its courses, which can be shared on any social network or business platform.


Querying Iceberg table data and performing time travel

To query an Iceberg dataset, use a standard SELECT statement like the following. Queries follow the Apache Iceberg format v2 spec and perform merge-on-read of both position and equality deletes.

To optimize query times, all predicates are pushed down to where the data lives.

Time travel and version travel queries

Each Apache Iceberg table maintains a versioned manifest of the Amazon S3 objects that it contains. Previous versions of the manifest can be used for time travel and version travel queries.

Time travel queries in Athena query Amazon S3 for historical data from a consistent snapshot as of a specified date and time. Version travel queries in Athena query Amazon S3 for historical data as of a specified snapshot ID.

Time travel queries

To run a time travel query, use FOR TIMESTAMP AS OF timestamp after the table name in the SELECT statement, as in the following example.

The system time to be specified for traveling is either a timestamp or timestamp with a time zone. If not specified, Athena considers the value to be a timestamp in UTC time.

The following example time travel queries select CloudTrail data for the specified date and time.
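Because Athena treats a zone-less timestamp as UTC, one defensive pattern is to normalise to UTC and write the zone out explicitly. The sketch below builds such a `FOR TIMESTAMP AS OF` query. The table name `cloudtrail_iceberg` and the timestamps are hypothetical.

```python
from datetime import datetime, timezone


def athena_time_travel_query(table: str, as_of: datetime) -> str:
    """SELECT ... FOR TIMESTAMP AS OF, with the instant written out in UTC."""
    if as_of.tzinfo is None:
        # Athena assumes UTC for a timestamp without a zone; make that explicit.
        as_of = as_of.replace(tzinfo=timezone.utc)
    utc = as_of.astimezone(timezone.utc)
    return f"SELECT * FROM {table} FOR TIMESTAMP AS OF TIMESTAMP '{utc:%Y-%m-%d %H:%M:%S} UTC'"


if __name__ == "__main__":
    print(athena_time_travel_query("cloudtrail_iceberg", datetime(2020, 1, 1, 10, 0)))
```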

Version travel queries

To execute a version travel query (that is, view a consistent snapshot as of a specified version), use FOR VERSION AS OF version after the table name in the SELECT statement, as in the following example.

The version parameter is the bigint snapshot ID associated with an Iceberg table version.

The following example version travel query selects data for the specified version.

The FOR SYSTEM_TIME AS OF and FOR SYSTEM_VERSION AS OF clauses in Athena engine version 2 have been replaced by the FOR TIMESTAMP AS OF and FOR VERSION AS OF clauses in Athena engine version 3.
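The keyword change between engine versions can be handled in a query builder. This sketch covers only the version clause and checks that the snapshot ID fits in a bigint, as the text requires. The table name and snapshot ID in the usage line are placeholders.

```python
BIGINT_MIN, BIGINT_MAX = -(2 ** 63), 2 ** 63 - 1

# Version-travel keywords per Athena engine version, as described above.
VERSION_CLAUSE = {2: "FOR SYSTEM_VERSION AS OF", 3: "FOR VERSION AS OF"}


def athena_version_query(table: str, snapshot_id: int, engine_version: int = 3) -> str:
    if engine_version not in VERSION_CLAUSE:
        raise ValueError("only engine versions 2 and 3 are covered here")
    if not BIGINT_MIN <= snapshot_id <= BIGINT_MAX:
        raise ValueError("snapshot ID must fit in a bigint")
    return f"SELECT * FROM {table} {VERSION_CLAUSE[engine_version]} {snapshot_id}"


if __name__ == "__main__":
    print(athena_version_query("iceberg_table", 949530903748831860))
```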

Retrieving the snapshot ID

You can use the Java SnapshotUtil class provided by Iceberg to retrieve the Iceberg snapshot ID, as in the following example.

Combining time and version travel

You can use time travel and version travel syntax in the same query to specify different timing and versioning conditions, as in the following example.

Creating and querying views with Iceberg tables

To create and query Athena views on Iceberg tables, use CREATE VIEW views as described in Working with views.

If you are interested in using the Iceberg view specification to create views, contact [email protected].

Working with Lake Formation fine-grained access control

Athena engine version 3 supports Lake Formation fine-grained access control with Iceberg tables, including column-level and row-level security access control. This access control works with time travel queries and with tables that have performed schema evolution. For more information, see Lake Formation fine-grained access control and Athena workgroups.

If you created your Iceberg table outside of Athena, use Apache Iceberg SDK version 0.13.0 or higher so that your Iceberg table column information is populated in the AWS Glue Data Catalog. If your Iceberg table does not contain column information in AWS Glue, you can use the Athena ALTER TABLE SET PROPERTIES statement or the latest Iceberg SDK to fix the table and update the column information in AWS Glue.


Data retention with time travel and fail-safe

This document describes time travel and fail-safe data retention for datasets. During the time travel and fail-safe periods, data that you have changed or deleted in any table in the dataset continues to be stored in case you need to recover it.

Time travel

You can access data from any point within the time travel window, which covers the past seven days by default. Time travel lets you query data that was updated or deleted, restore a table or dataset that was deleted, or restore a table that expired.

Configure the time travel window

You can set the duration of the time travel window, from a minimum of two days to a maximum of seven days. Seven days is the default. You set the time travel window at the dataset level, which then applies to all of the tables within the dataset.

You can configure the time travel window to be longer in cases where it is important to have a longer time to recover updated or deleted data, and to be shorter where it isn't required. Using a shorter time travel window lets you save on storage costs when using the physical storage billing model. These savings don't apply when using the logical storage billing model.

For more information on how the storage billing model affects cost, see Billing.
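BigQuery expresses the window in hours through the dataset option `max_time_travel_hours`. The sketch below converts a whole-day window in the 2 to 7 day range described above into that option and builds an `ALTER SCHEMA` statement for it. The dataset name is hypothetical.

```python
def max_time_travel_hours(days: int) -> int:
    """Convert a whole-day window to hours, enforcing the 2-7 day range."""
    if not 2 <= days <= 7:
        raise ValueError("the time travel window must be between 2 and 7 days")
    return days * 24


def set_window_ddl(dataset: str, days: int) -> str:
    """DDL that sets the dataset-level time travel window."""
    return f"ALTER SCHEMA {dataset} SET OPTIONS (max_time_travel_hours = {max_time_travel_hours(days)});"


if __name__ == "__main__":
    print(set_window_ddl("mydataset", 3))
```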

How the time travel window affects table and dataset recovery

A deleted table or dataset uses the time travel window duration that was in effect at the time of deletion.

For example, if you have a time travel window duration of two days and then increase the duration to seven days, tables deleted before that change are still only recoverable for two days. Similarly, if you have a time travel window duration of five days and you reduce that duration to three days, any tables that were deleted before the change are still recoverable for five days.
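The rule that a deleted table keeps the window in effect at deletion time can be modelled with a sorted list of window changes. The dates below are hypothetical and mirror the two-day-to-seven-day example.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import List, Tuple


def window_in_effect(changes: List[Tuple[datetime, int]], at: datetime) -> int:
    """Window (days) in effect at `at`; `changes` is sorted (effective_from, days)."""
    starts = [start for start, _ in changes]
    i = bisect_right(starts, at)
    if i == 0:
        raise LookupError("no window configured at that time")
    return changes[i - 1][1]


def recoverable_until(changes: List[Tuple[datetime, int]], deleted_at: datetime) -> datetime:
    """Deleted tables keep the window that applied when they were deleted."""
    return deleted_at + timedelta(days=window_in_effect(changes, deleted_at))


if __name__ == "__main__":
    changes = [(datetime(2024, 1, 1), 2), (datetime(2024, 1, 10), 7)]
    # Deleted before the increase to 7 days, so only 2 days of recoverability.
    print(recoverable_until(changes, datetime(2024, 1, 5)))
```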

Because time travel windows are set at the dataset level, you can't change the time travel window of a deleted dataset until it is undeleted.

If you reduce the time travel window duration, delete a table, and then realize that you need a longer period of recoverability for that data, you can create a snapshot of the table from a point in time prior to the table deletion. You must do this while the deleted table is still recoverable. For more information, see Create a table snapshot using time travel.

Specify a time travel window

You can use the Google Cloud console, the bq command-line tool, or the BigQuery API to specify the time travel window for a dataset.

For instructions on how to specify the time travel window for a new dataset, see Create datasets.

For instructions on how to update the time travel window for an existing dataset, see Update time travel windows.

If the timestamp specifies a time outside time travel window, or from before the table was created, then the query fails and returns an error like the following:

Time travel and row-level access

If a table has, or has had, row-level access policies, then only a table administrator can access historical data for the table.

The following Identity and Access Management (IAM) permission is required: bigquery.rowAccessPolicies.overrideTimeTravelRestrictions.

The following BigQuery role provides the required permission: BigQuery Admin (roles/bigquery.admin).

The bigquery.rowAccessPolicies.overrideTimeTravelRestrictions permission can't be added to a custom role.

Run the following command to get the equivalent Unix epoch time:

In the bq command-line tool, use the UNIX epoch time 1691164834000 returned by the previous command. Run the following command to restore a copy of the deleted table deletedTableID to another table, restoredTable, within the same dataset myDatasetID:
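The conversion and the restore command can be sketched in Python. For illustration, this assumes the deletion instant 2023-08-04 16:00:34.456789 UTC. That instant converts to the 1691164834000 value above: whole seconds with three zeros appended and the sub-second part dropped. The `table@milliseconds` form is the decorator `bq cp` uses for point-in-time copies.

```python
from datetime import datetime, timezone


def epoch_millis(ts: datetime) -> int:
    """Whole seconds since the Unix epoch with three zeros appended (sub-second part dropped)."""
    if ts.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    return int(ts.timestamp()) * 1000


def bq_restore_command(dataset: str, deleted_table: str, restored_table: str,
                       snapshot_ms: int) -> str:
    """bq cp from the table@timestamp decorator into a new table in the same dataset."""
    return f"bq cp {dataset}.{deleted_table}@{snapshot_ms} {dataset}.{restored_table}"


if __name__ == "__main__":
    deleted_at = datetime(2023, 8, 4, 16, 0, 34, 456789, tzinfo=timezone.utc)
    ms = epoch_millis(deleted_at)  # 1691164834000
    print(bq_restore_command("myDatasetID", "deletedTableID", "restoredTable", ms))
```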

BigQuery provides a fail-safe period. During the fail-safe period, deleted data is automatically retained for an additional seven days after the time travel window, so that the data is available for emergency recovery. Data is recoverable at the table level. Data is recovered for a table from the point in time represented by the timestamp of when that table was deleted. The fail-safe period is not configurable.

You can't query or directly recover data in fail-safe storage. To recover data from fail-safe storage, contact Cloud Customer Care.

If you set your storage billing model to use physical bytes, the total storage costs you are billed for include the bytes used for time travel and fail-safe storage. Time travel and fail-safe storage are charged at the active physical storage rate. You can configure the time travel window to balance storage costs with your data retention needs.

If you set your storage billing model to use logical bytes, the total storage costs you are billed for don't include the bytes used for time travel or fail-safe storage.

The following table shows a comparison of physical and logical storage costs:

If you use physical storage, you can see the bytes used by time travel and fail-safe by looking at the TIME_TRAVEL_PHYSICAL_BYTES and FAIL_SAFE_PHYSICAL_BYTES columns in the TABLE_STORAGE and TABLE_STORAGE_BY_ORGANIZATION views. For an example of how to use one of these views to estimate your costs, see Forecast storage billing.

Physical storage example

The following table shows how deleted data is billed when you are using physical storage. This example shows a situation where the total active physical storage is 200 GiB and then 50 GiB is deleted, and the time travel window is seven days:

Deleting data from long-term physical storage works in the same way.
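The arithmetic behind this example can be sketched in Python. It assumes, for illustration, that the 50 GiB counts as time travel bytes for the seven days of the window (days 1 to 7) and as fail-safe bytes for the following seven days (days 8 to 14), and that all three categories are billed at the active physical rate as described above. It reports GiB only, since no prices are given here.

```python
TOTAL_BEFORE_GIB = 200
DELETED_GIB = 50
WINDOW_DAYS = 7  # time travel window from the example
FAIL_SAFE_DAYS = 7  # fixed fail-safe period


def billed_physical_gib(day: int) -> dict[str, int]:
    """Billable physical GiB `day` days after the delete (day 0 = before it).

    Assumption: deleted bytes are time travel bytes on days 1..7,
    fail-safe bytes on days 8..14, and no longer billed afterwards.
    """
    if day <= 0:
        return {"active": TOTAL_BEFORE_GIB, "time_travel": 0, "fail_safe": 0}
    in_window = day <= WINDOW_DAYS
    in_fail_safe = WINDOW_DAYS < day <= WINDOW_DAYS + FAIL_SAFE_DAYS
    return {
        "active": TOTAL_BEFORE_GIB - DELETED_GIB,
        "time_travel": DELETED_GIB if in_window else 0,
        "fail_safe": DELETED_GIB if in_fail_safe else 0,
    }


for day in (0, 1, 7, 8, 14, 15):
    breakdown = billed_physical_gib(day)
    print(day, breakdown, "total:", sum(breakdown.values()))
```

Under these assumptions the billed total stays at 200 GiB for two weeks after the delete and only drops to 150 GiB once the fail-safe period ends.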

Limitations

- Time travel only provides access to historical data for the duration of the time travel window. To preserve table data for non-emergency purposes for longer than the time travel window, use table snapshots.

- If a table has, or has previously had, row-level access policies, then time travel can only be used by table administrators. For more information, see Time travel and row-level access.

- Time travel does not restore table metadata.

What's next

- Learn how to query and recover time travel data.

- Learn more about table snapshots .

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2024-05-07 UTC.

Apache Iceberg Cookbook


This recipe demonstrates ways to query historical snapshots of Apache Iceberg tables.

Time travel to query historical snapshots in Iceberg tables

Every change to an Iceberg table creates an independent version of the metadata tree, called a snapshot. As they are replaced, historical snapshots aren't deleted immediately because there could be long-running jobs still reading from them. Snapshots are kept for 5 days by default. To learn how to customize snapshot retention, see the recipe on snapshot expiration.
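A simplified sketch of the default retention rule: snapshots older than five days (Iceberg's default for the `history.expire.max-snapshot-age-ms` table property) become candidates for expiration, while the current snapshot is always kept. Real expiration only happens when an expiration job runs and honours further settings, such as a minimum number of snapshots to keep, which this sketch ignores. The function name and sample IDs are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Iceberg's default for history.expire.max-snapshot-age-ms is five days.
MAX_SNAPSHOT_AGE = timedelta(days=5)


def expiration_candidates(
    snapshots: dict[int, datetime], current_id: int, now: datetime
) -> set[int]:
    """Return IDs of snapshots older than the retention age, never the current one.

    Simplified: ignores min-snapshots-to-keep, branch/tag retention, and the
    fact that nothing expires until a maintenance job actually runs.
    """
    cutoff = now - MAX_SNAPSHOT_AGE
    return {
        snapshot_id
        for snapshot_id, committed_at in snapshots.items()
        if committed_at < cutoff and snapshot_id != current_id
    }


now = datetime(2024, 6, 10, tzinfo=timezone.utc)
history = {
    101: datetime(2024, 6, 1, tzinfo=timezone.utc),
    102: datetime(2024, 6, 4, tzinfo=timezone.utc),
    103: datetime(2024, 6, 9, tzinfo=timezone.utc),
}
print(sorted(expiration_candidates(history, current_id=103, now=now)))
```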

Before snapshots expire, you can still read them using time travel queries. Time travel queries are supported in Apache Spark and Trino using the SQL clauses FOR VERSION AS OF and FOR TIMESTAMP AS OF.

When you use FOR VERSION AS OF , Iceberg loads the snapshot specified by the snapshot ID. To see what snapshots are available, query the snapshots metadata table. You can also use this syntax to read from a tag or a branch by name.

When you use FOR TIMESTAMP AS OF , Iceberg loads the snapshot that was current at that time, based on the table history. You can inspect table history by querying the history metadata table.
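The `FOR TIMESTAMP AS OF` lookup can be mimicked in a few lines of Python over an in-memory copy of the history metadata table: pick the most recent entry whose `made_current_at` is at or before the requested time. This is a sketch of the idea, not Iceberg's own code; the `HistoryEntry` type and the sample snapshot IDs are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class HistoryEntry:
    made_current_at: datetime  # column name used by the history metadata table
    snapshot_id: int


def snapshot_as_of(history: list[HistoryEntry], as_of: datetime) -> int:
    """Return the ID of the snapshot that was current at `as_of`."""
    ordered = sorted(history, key=lambda entry: entry.made_current_at)
    times = [entry.made_current_at for entry in ordered]
    index = bisect_right(times, as_of)  # number of entries at or before `as_of`
    if index == 0:
        raise ValueError("no snapshot was current at the requested time")
    return ordered[index - 1].snapshot_id


history = [
    HistoryEntry(datetime(2024, 3, 1, 10, 0), 1111),
    HistoryEntry(datetime(2024, 3, 1, 10, 5), 2222),
]
print(snapshot_as_of(history, datetime(2024, 3, 1, 10, 3)))
```

Because the lookup depends only on commit timestamps, it inherits the clock-skew caveat that follows.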

Time travel to a specific time is convenient, but it isn't guaranteed to use the same snapshot that a given job read. Because of clock skew, the timestamp of, say, a log message from one node might be inconsistent with the timestamp another node used to create the commit. When using time travel to reproduce a read, it is best to check the reader's log to identify the snapshot ID that was used.

Time travel with SQL examples

Apache Iceberg provides versioning and time travel capabilities, allowing users to query data as it existed at a specific point in time. This feature can be extremely useful for debugging, auditing, and historical analysis.

Time travel in Iceberg allows users to access historical snapshots of their table. Snapshots are created whenever a table is modified, such as adding or deleting data, and are assigned unique identifiers. Each snapshot is a consistent and complete view of the table at a given point in time.

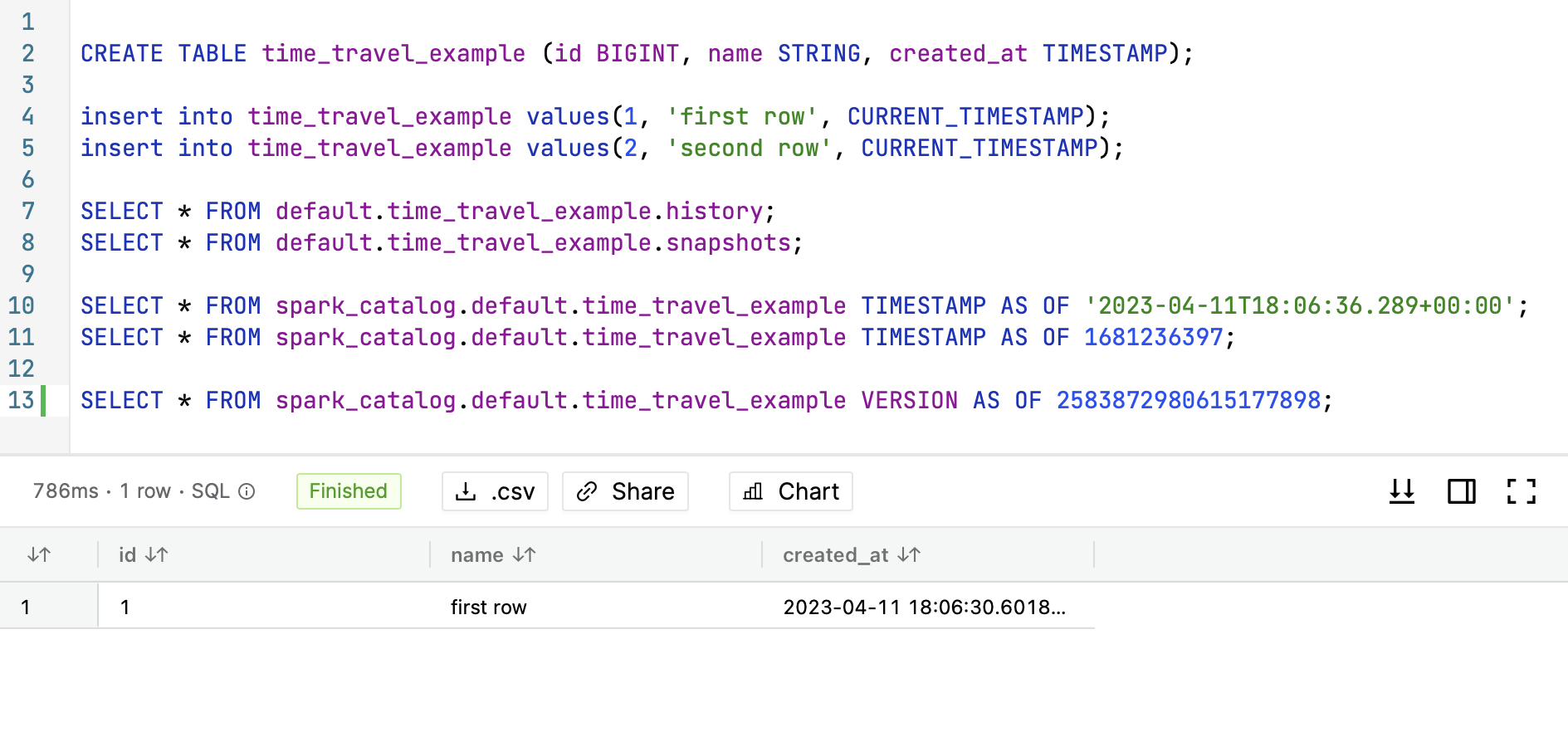

Below are SQL examples showing how to use time travel on the IOMETE platform.

Prepare sample data

Now insert the first row into the table:

Wait a couple of minutes before inserting the next row, so that the difference in timestamps is visible.

Let's insert the second row into our table:

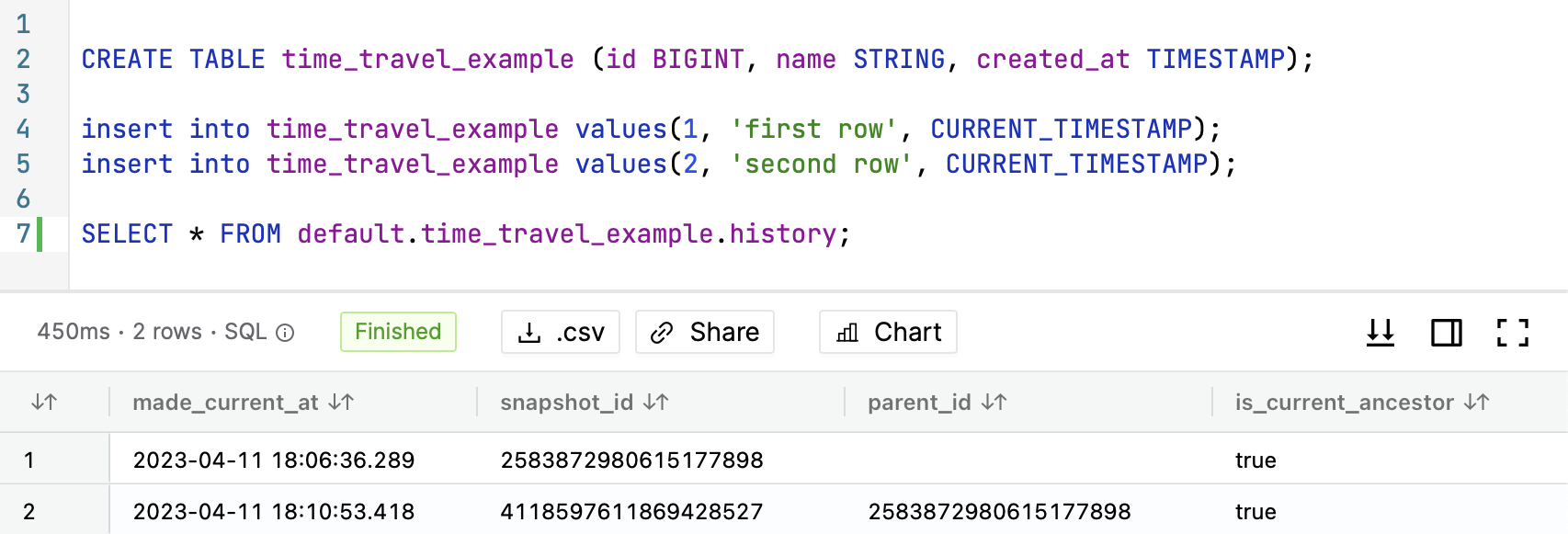

Review history

To query a table at a specific snapshot, you need the snapshot ID, which you can find in the history table. Let's review the history of our table and see how many snapshots it has by running the following query:

The history table is a special metadata table that Iceberg automatically provides for each table. It contains information about all snapshots of the table. To query a table's history, reference it in the format database.table.history.

The result of the query looks like this:

As you can see, there are two snapshots. You can get more details about the snapshots by running the following query:

Time travel

Iceberg provides three ways to query data at a specific time.

- Using TIMESTAMP

- Using snapshot ID

- Using named reference (Branch or Tag)

You can run any of the queries below to query the data:

Each of the queries above returns only one row.
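As a sketch, the metadata-table query and the three time travel forms (timestamp, snapshot ID, and named reference) can be generated from Python as Spark SQL strings. The table name `demo_db.time_travel_demo`, the tag name, and the helper functions are hypothetical; the snapshot ID is a sample value of the kind the history table returns. Spark interprets a timestamp literal without an offset in the session time zone.

```python
from datetime import datetime
from typing import Optional


def metadata_table_query(table: str, kind: str = "history") -> str:
    """Query an Iceberg metadata table such as db.table.history or db.table.snapshots."""
    return f"SELECT * FROM {table}.{kind}"


def time_travel_query(
    table: str,
    *,
    snapshot_id: Optional[int] = None,
    timestamp: Optional[datetime] = None,
    ref: Optional[str] = None,
) -> str:
    """Build a Spark SQL time travel query from exactly one of three selectors."""
    given = [value is not None for value in (snapshot_id, timestamp, ref)]
    if sum(given) != 1:
        raise ValueError("pass exactly one of snapshot_id, timestamp, or ref")
    if snapshot_id is not None:
        clause = f"FOR VERSION AS OF {int(snapshot_id)}"
    elif timestamp is not None:
        clause = f"FOR TIMESTAMP AS OF '{timestamp:%Y-%m-%d %H:%M:%S}'"
    else:
        clause = f"FOR VERSION AS OF '{ref}'"  # branch or tag name
    return f"SELECT * FROM {table} {clause}"


table = "demo_db.time_travel_demo"  # hypothetical database.table
print(metadata_table_query(table))
print(time_travel_query(table, timestamp=datetime(2024, 3, 1, 10, 3)))
print(time_travel_query(table, snapshot_id=2583872980615177898))
print(time_travel_query(table, ref="first-row"))
```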

Using named references

You can also use named references (branches and tags) to query data at a specific time. In the example below we will create a tag and then use it to query data.

Branches and tags will be covered in more detail in a future section.
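A minimal sketch of creating a tag and then reading through it, expressed as Spark SQL strings built in Python. It assumes the Iceberg Spark SQL extensions are enabled, since the `CREATE TAG` DDL depends on them. The table name, tag name, snapshot ID, and seven-day retention are hypothetical.

```python
from typing import Optional


def create_tag_sql(
    table: str, tag: str, snapshot_id: int, retain_days: Optional[int] = None
) -> str:
    """Build an Iceberg Spark DDL statement that tags an existing snapshot."""
    sql = f"ALTER TABLE {table} CREATE TAG `{tag}` AS OF VERSION {int(snapshot_id)}"
    if retain_days is not None:
        sql += f" RETAIN {int(retain_days)} DAYS"  # how long the tag itself is kept
    return sql


def read_tag_sql(table: str, tag: str) -> str:
    """Read the table as of the snapshot the tag points to."""
    return f"SELECT * FROM {table} FOR VERSION AS OF '{tag}'"


table = "demo_db.time_travel_demo"  # hypothetical
print(create_tag_sql(table, "first-row", 2583872980615177898, retain_days=7))
print(read_tag_sql(table, "first-row"))
```

A tag is a stable name for one snapshot, so a later reader does not need to look up the snapshot ID or guess a timestamp.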

What's next?

Iceberg docs: check the Apache Iceberg documentation for more information.


The 2025 Real ID deadline for new licenses is really real this time, DHS says

If you plan on flying around the country in 2025 and beyond, you might want to listen up.

You have about 365 days to make your state-issued driver’s license or identification “Real ID” compliant, per the Department of Homeland Security.

The Real ID compliance is part of a larger act passed by Congress in 2005 to set “minimum security standards” for the distribution of identification materials, including driver’s licenses. This means that certain federal agencies, like the Transportation Security Administration or DHS, won’t be able to accept state-issued forms of identification without the Real ID seal.

It's taken a while for the compliance to stick, with DHS originally giving a 2020 deadline before pushing it back a year, then another two years and another two years after that due to “backlogged transactions” at MVD offices nationwide, according to previous USA TODAY reports.

You won’t be able to board federally regulated commercial aircraft, enter nuclear power plants, or access certain facilities if your identification documents aren’t Real ID compliant by May 7, 2025.


Here’s what we know about Real IDs, including where to get one and why you should think about getting one.

Do I have to get a Real ID?

Not necessarily.

If you have another form of identification that TSA accepts, there probably isn’t an immediate reason to obtain one, at least for travel purposes. But if you don’t have another form of identification and would like to travel around the country in the near future, you should try to obtain one.

Here are all the other TSA-approved forms of identification:

◾ State-issued Enhanced Driver’s License

◾ U.S. passport

◾ U.S. passport card

◾ DHS trusted traveler cards (Global Entry, NEXUS, SENTRI, FAST)

◾ U.S. Department of Defense ID, including IDs issued to dependents

◾ Permanent resident card

◾ Border crossing card

◾ An acceptable photo ID issued by a federally recognized Tribal Nation/Indian Tribe

◾ HSPD-12 PIV card

◾ Foreign government-issued passport

◾ Canadian provincial driver's license or Indian and Northern Affairs Canada card

◾ Transportation worker identification credential

◾ U.S. Citizenship and Immigration Services Employment Authorization Card (I-766)

◾ U.S. Merchant Mariner Credential

◾ Veteran Health Identification Card (VHIC)

However, federal agencies “may only accept” state-issued driver’s licenses or identification cards that are Real ID compliant if you are trying to gain access to a federal facility. That includes TSA security checkpoints.

Enhanced driver’s licenses, only issued by Washington, Michigan, Minnesota, New York, and Vermont, are considered acceptable alternatives to REAL ID-compliant cards, according to DHS.

What can I use my Real ID for?

For most people, it's all about boarding flights.

You can only use your Real ID card to obtain access to "nuclear power plants, access certain facilities, or board federally regulated commercial aircrafts," according to DHS.

The cards can't be used to travel across any border, whether that's Canada, Mexico, or any other international destination, according to DHS.

How do I get a Real ID? What does a Real ID look like?

All you have to do to get a Real ID is to make time to head over to your local department of motor vehicles.

Every state is different, so the documents needed to verify your identity will vary. DHS says that, at minimum, you will be asked to provide documentation of your full legal name, date of birth, Social Security number, two proofs of address of principal residence, and lawful status.

The only difference between the state-issued forms of identification you have now and the Real ID-compliant card you hope to obtain is a unique marking stamped in the right-hand corner. The mark stamped on your Real ID compliant cards depends on the state.


Action News Jax Now

Real ID deadline looming. How you can get yours in time to fly in 2025

JACKSONVILLE, Fla. — Travelers can expect some major changes to what documents they need to fly.

On May 7, 2025, the enforcement of “Real IDs” will begin, which means you have 1 year to make sure you’ve got the documents you need to travel.


So, what is a Real ID, and what do you need to get one?

A traditional driver’s license will no longer be accepted by TSA or U.S. Border Patrol.

Instead, anyone 18 or older will need to present either a passport or a Real ID-compliant license; an enhanced driver's license is also accepted.

You’ll also need a Real ID to enter a federal building.

Following the 9/11 attacks, the federal government set new security standards to obtain state-issued driver’s licenses and ID cards, and some states began issuing them more than a decade ago.


What do you need to obtain a Real ID?

- Some states may require proof of Social Security.

- Some states may require 2 proofs of residency, like a utility bill or bank statement.

- Proof of status will be required, such as a passport, a U.S. birth certificate or a permanent resident card.

- Proof of citizenship is required if you weren’t originally a U.S. citizen.


Analyzing data usage/manipulation over specified periods of time. Introduction to Time Travel: Using Time Travel, you can perform the following actions within a defined period of time: query data in the past that has since been updated or deleted, and create clones of entire tables, schemas, and databases at or before specific points in the past.

The Time Travel feature allows database administrators to query historical data, clone old tables, and restore objects dropped in the past. However, given the massive sizes databases can grow into, Time Travel is not a drop-in equivalent of code version control. Every time a table is modified (deleted or updated), Snowflake takes a snapshot of ...

This video demonstrates how Snowflake's Time Travel function can be used in conjunction with a specific Query ID.

How to Query Snowflake Time Travel Data? When you perform any DML operation on a table, Snowflake saves prior versions of the Table data ... a Timestamp or a Time Offset from the present) or a Statement ID (e.g. SELECT or INSERT). The query below selects Historical Data from a Table as of the Date and Time indicated by the Timestamp: select * from ...

To query historical data using Time Travel in DbVisualizer, you can follow these steps: ... (change_id INT AUTOINCREMENT PRIMARY KEY, user_id STRING, change_description STRING, change ...

OFFSET => time_difference. Specifies the difference in seconds from the current time to use for Time Travel, in the form -N, where N can be an integer or an arithmetic expression (e.g. -120 is 120 seconds; -30*60 is 1800 seconds, or 30 minutes). STATEMENT => id. Specifies the query ID of a statement to use as the reference point for Time Travel.
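These reference points can be sketched as a small Python query builder for Snowflake's `AT(...)` and `BEFORE(...)` clauses. The offset is computed in Python rather than passed as an expression, so "8 minutes back" becomes `-8 * 60`. The table name `timeTravel_table`, the query ID, and the helper name are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional


def snowflake_time_travel_query(
    table: str,
    *,
    offset_seconds: Optional[int] = None,
    statement_id: Optional[str] = None,
    timestamp: Optional[datetime] = None,
    before: bool = False,
) -> str:
    """Build a Snowflake SELECT with an AT(...) or BEFORE(...) clause."""
    given = [value is not None for value in (offset_seconds, statement_id, timestamp)]
    if sum(given) != 1:
        raise ValueError("pass exactly one of offset_seconds, statement_id, or timestamp")
    if offset_seconds is not None:
        if offset_seconds > 0:
            raise ValueError("OFFSET must be zero or negative (seconds in the past)")
        argument = f"OFFSET => {offset_seconds}"
    elif statement_id is not None:
        argument = f"STATEMENT => '{statement_id}'"
    else:
        argument = f"TIMESTAMP => '{timestamp.isoformat()}'::TIMESTAMP_TZ"
    keyword = "BEFORE" if before else "AT"
    return f"SELECT * FROM {table} {keyword}({argument})"


print(snowflake_time_travel_query("timeTravel_table", offset_seconds=-8 * 60))
print(snowflake_time_travel_query(
    "timeTravel_table",
    statement_id="01b2c3d4-0000-1234-0000-000012345678",  # hypothetical query ID
    before=True,
))
print(snowflake_time_travel_query(
    "timeTravel_table",
    timestamp=datetime(2024, 5, 7, 11, 0, tzinfo=timezone.utc),
))
```

`BEFORE(STATEMENT => ...)` reads the data as it was just before that statement ran, which is the usual choice when undoing an accidental update or delete.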

You specify a query ID. Time travel will return the data before the specified query was executed. Let's try the last option (you can read more about finding the query ID in the blog post Cool Stuff in Snowflake - Part 6: Query History). We can also use an offset, which is measured in seconds. In the example below, we go 8 minutes back in time:

Within the Snowflake web console, navigate to and use the 'Getting Started with Time Travel' Worksheets we created earlier. Use the above command to make a database called 'timeTravel_db'. The output will show a status message of . This command creates a table named 'timeTravel_table' on the timeTravel_db database.

In this course, learn how to use Time Travel to access historical data using query ID filters, relative time offsets, and absolute timestamp values. Next, practice cloning historical copies of tables into current tables and restoring dropped tables with the UNDROP command. Finally, discover how to install SnowSQL, define and use variables, and ...

However, I see relying on Snowflake variables as ephemeral and would rather put this into a Python variable; at the very least, if I wanted to time travel a day after the query is executed and the Snowflake connection has been closed, I'll need some way to pass the query_id back in to execute: snowflake_cursor.execute("""select last_query_id();
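One way to keep the query ID outside the Snowflake session, sketched under assumptions: capture it with `LAST_QUERY_ID()` right after the statement runs, serialize it together with the table name, and rebuild a `BEFORE(STATEMENT => ...)` query later. The helpers are hypothetical, and a small stub stands in for a `snowflake.connector` cursor so the example runs without an account; with the real connector, the cursor also exposes the last query ID as `cursor.sfqid`.

```python
import json


def run_and_capture_query_id(cursor, sql: str) -> str:
    """Run `sql`, then ask the same session for that statement's query ID."""
    cursor.execute(sql)
    cursor.execute("SELECT LAST_QUERY_ID()")
    return cursor.fetchone()[0]


def serialize_query_id(query_id: str, table: str) -> str:
    """Persist what is needed to time travel later (e.g. to a file or a queue)."""
    return json.dumps({"query_id": query_id, "table": table})


def before_statement_query(payload: str) -> str:
    """Rebuild a BEFORE(STATEMENT => ...) query from the saved payload."""
    saved = json.loads(payload)
    return f"SELECT * FROM {saved['table']} BEFORE(STATEMENT => '{saved['query_id']}')"


class StubCursor:
    """Stand-in for a snowflake.connector cursor, used only for this demo."""

    def __init__(self, query_id: str) -> None:
        self._query_id = query_id
        self.executed: list[str] = []

    def execute(self, sql: str) -> "StubCursor":
        self.executed.append(sql)
        return self

    def fetchone(self) -> tuple:
        return (self._query_id,)


cursor = StubCursor("01b2c3d4-0000-1234-0000-000012345678")  # hypothetical ID
qid = run_and_capture_query_id(cursor, "DELETE FROM timeTravel_table WHERE id = 1")
payload = serialize_query_id(qid, "timeTravel_table")
print(before_statement_query(payload))
```

The saved ID is only useful while the statement is still inside the table's Time Travel retention period.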

To query an Iceberg dataset, use a standard SELECT statement like the following. Queries follow the Apache Iceberg format v2 spec and perform merge-on-read of both position and equality deletes. SELECT * FROM [db_name.]table_name [WHERE predicate]. To optimize query times, all predicates are pushed down to where the data lives.


In fact, you'll see the syntax to query with time travel is much the same as in SQL Server. The biggest difference, however, is that SQL Server stores all the versions indefinitely, while Snowflake only maintains the different versions of your table for a specific period of time. Depending on your edition, this is either one day or up to 90 days.
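The retention period itself is controlled by the `DATA_RETENTION_TIME_IN_DAYS` parameter. The sketch below checks a requested value against the edition limits before building the `ALTER TABLE` statement: at most 1 day on Standard Edition, up to 90 days for permanent tables on Enterprise Edition and higher (treated as `"enterprise"` here), and at most 1 day for transient or temporary tables. The helper name and table name are hypothetical.

```python
# Maximum DATA_RETENTION_TIME_IN_DAYS for permanent tables, by edition.
MAX_RETENTION_DAYS = {"standard": 1, "enterprise": 90}


def set_retention_sql(
    table: str, days: int, edition: str = "enterprise", transient: bool = False
) -> str:
    """Build an ALTER TABLE statement after checking the retention limit."""
    limit = MAX_RETENTION_DAYS[edition.lower()]
    if transient:
        limit = min(limit, 1)  # transient and temporary tables: 0 or 1 day
    if not 0 <= days <= limit:
        raise ValueError(f"retention must be between 0 and {limit} days, got {days}")
    return f"ALTER TABLE {table} SET DATA_RETENTION_TIME_IN_DAYS = {days}"


print(set_retention_sql("timeTravel_table", 30))
```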


You can use a query like this to get this information from the account_usage schema: select bytes_scanned, total_elapsed_time, query_id from snowflake.account_usage.query_history where query_id = 'YOUR_QUERY_ID'. Answered Mar 6, 2023 at 9:06 by Alexander Klimenko.
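A small variation on that answer, sketched in Python: bind the query ID as a parameter instead of pasting it into the SQL string. It assumes the connector's default `pyformat` parameter style (`%s` placeholders); the helper name and the query ID are hypothetical.

```python
def query_stats_lookup(query_id: str) -> tuple[str, tuple[str, ...]]:
    """Return SQL and bind parameters for one query's scan statistics."""
    sql = (
        "SELECT bytes_scanned, total_elapsed_time, query_id "
        "FROM snowflake.account_usage.query_history "
        "WHERE query_id = %s"  # pyformat placeholder, filled in by the connector
    )
    return sql, (query_id,)


sql, params = query_stats_lookup("01b2c3d4-0000-1234-0000-000012345678")  # hypothetical ID
# With a real connection: cursor.execute(sql, params); row = cursor.fetchone()
print(sql)
print(params)
```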
